Recently I attended the two-day Deep Learning Summit in London, hosted by Re-Work. As someone who is still relatively new to the deep learning community, I felt a little apprehensive beforehand, but my fears were quickly put to rest by the very high quality of the talks, which were split into digestible 20-minute chunks. Each talk gave a good taster of either what the speaker was researching or what their company does.

There was a wide variety of talks, from established companies such as Google’s DeepMind, Facebook and Amazon to start-ups less than a year old such as Echobox and UIzard, showcasing the interesting and diverse areas to which deep neural networks can be applied.

The event was very well organized. Re-Work grouped related talks together, so each talk could build on the ones before it and the audience could engage with one topic at a time. There were talks on both the research and the application of neural networks; some went into detail, while others gave a more general overview of how deep learning is applied.

I found Bayesian Neural Networks particularly interesting. In these, each weight is a distribution rather than a single value, which lets you make use of the uncertainty around that value. I also found encoder/decoder neural networks fascinating.

I would go again next year, as deep learning is a rapidly moving field.

Here is a summary of the talks at the event:

Probability & Uncertainty in Deep Learning, Andreas Damianou, Amazon

This talk covered how standard neural networks are deterministic: they offer generalisation and data generation, but no predictive uncertainty. Bayesian Neural Networks were introduced here, in which each weight is a distribution instead of a single value. By using a distribution, you can propagate all the available information rather than just the mode (a single value). Deep Gaussian Processes are an example of this approach, although they are more challenging because the uncertainty has to be propagated through the layers.
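To make the idea of a weight distribution concrete, here is a minimal sketch, assuming a single linear neuron whose weight and bias each follow an independent Gaussian. Instead of propagating one value, it draws many weight samples, propagates each one and summarises the outputs as a predictive mean and spread. The parameter values are invented for illustration, and Monte Carlo sampling is only one of several ways to propagate the uncertainty.

```python
import random
import statistics

def predict_with_uncertainty(x, w_mean, w_std, b_mean, b_std, n_samples=10_000, seed=0):
    """Propagate Gaussian weight uncertainty through y = w * x + b by sampling.

    Returns the predictive mean and standard deviation of y.
    """
    rng = random.Random(seed)
    outputs = []
    for _ in range(n_samples):
        # Draw one concrete network from the weight distributions...
        w = rng.gauss(w_mean, w_std)
        b = rng.gauss(b_mean, b_std)
        # ...and propagate the input through it.
        outputs.append(w * x + b)
    return statistics.fmean(outputs), statistics.stdev(outputs)

# Hypothetical weight distributions: w ~ N(2.0, 0.5^2), b ~ N(1.0, 0.1^2).
mean, std = predict_with_uncertainty(x=3.0, w_mean=2.0, w_std=0.5, b_mean=1.0, b_std=0.1)
print(f"prediction {mean:.2f} +/- {std:.2f}")
```

Setting both standard deviations to zero recovers an ordinary deterministic neuron with no spread in its output. With non-zero spreads, the output uncertainty grows with the size of the input, because the weight's uncertainty is scaled by it.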

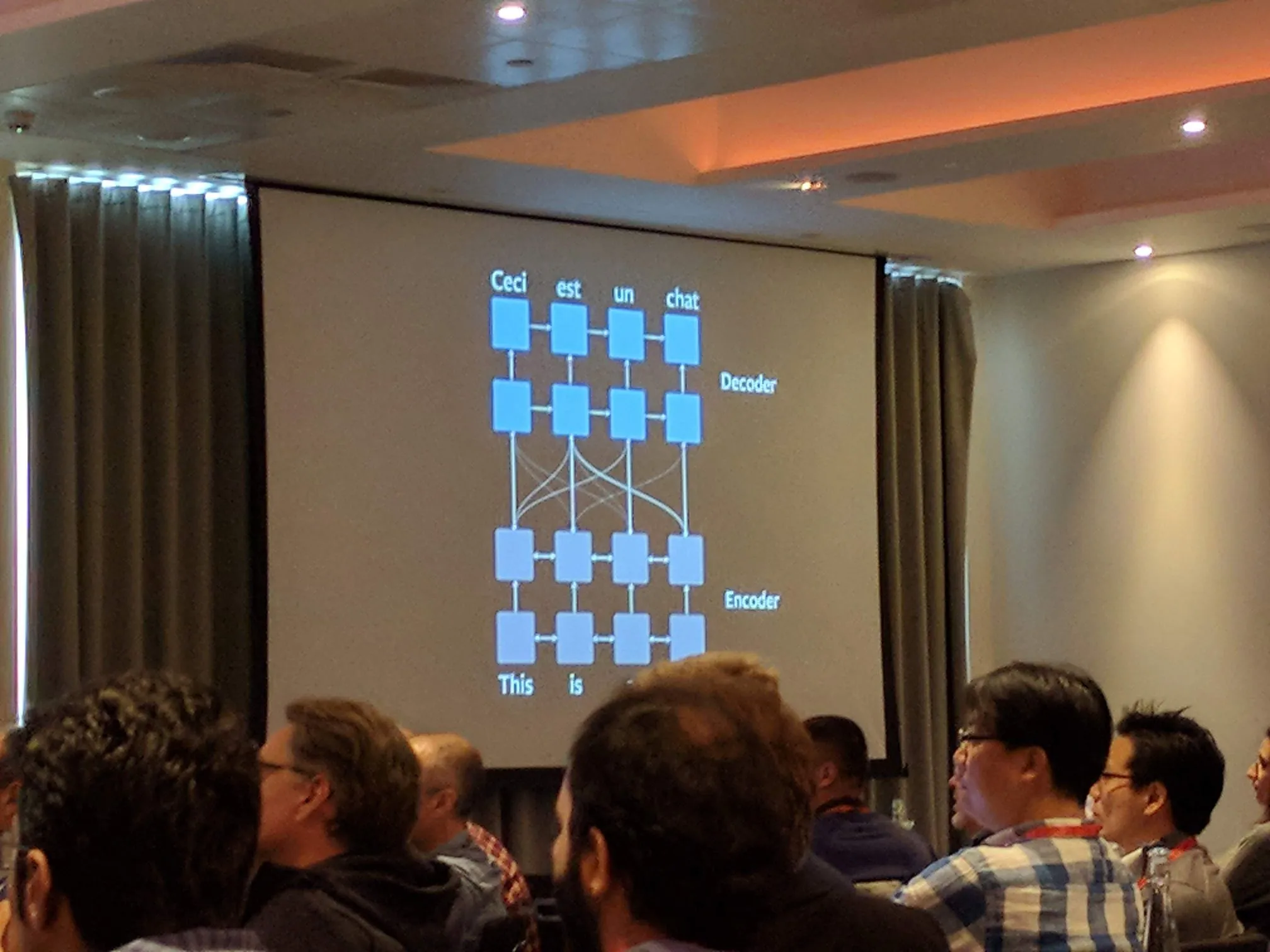

Sequence to Sequence Networks for Speech & Language Translation, Shubho Sengupta, Facebook AI Research (FAIR)

Translation networks are the single biggest networks for any company. Siri and Alexa are examples of speech systems. They are neural networks trained with supervision, and they require a lot of data: 20-50 million translations to get accurate speech. Five years ago this would have been improbable.

The deep neural networks used are encoder-decoder networks, with the goal of building something similar to the Babel fish from The Hitchhiker’s Guide to the Galaxy. The encoder takes in words over a period of time, and the decoder is a neural network that returns the answer to the question or the translation.

The questions still to be answered are:

- What weights are given to each word in a sentence?
- What weights are given to the most important words?

These questions ask whether a word that carries context or a word that conveys an emotion should have the higher weight, and how that is decided.
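One common way to give each word a weight is an attention mechanism. The talk did not specify the scoring function, so this is a minimal sketch, assuming dot-product attention over toy 3-dimensional word vectors invented for illustration: each word is scored against a query (the decoder's current state), and a softmax turns the scores into weights that sum to one.

```python
import math

def softmax(scores):
    # Subtract the max for numerical stability before exponentiating.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention_weights(query, word_vectors):
    """Dot-product attention: score each word against the query, then normalise."""
    scores = [sum(q * k for q, k in zip(query, vec)) for vec in word_vectors]
    return softmax(scores)

def context_vector(weights, word_vectors):
    # Weighted sum of the word vectors, using the attention weights.
    dim = len(word_vectors[0])
    return [sum(w * vec[i] for w, vec in zip(weights, word_vectors)) for i in range(dim)]

# Hypothetical encoder outputs for the sentence "I love Paris".
words = ["I", "love", "Paris"]
vectors = [[0.1, 0.0, 0.2], [0.9, 0.3, 0.1], [0.2, 1.0, 0.8]]
query = [0.5, 1.0, 0.5]  # hypothetical decoder state

weights = attention_weights(query, vectors)
for word, w in zip(words, weights):
    print(f"{word:>6}: {w:.2f}")
ctx = context_vector(weights, vectors)
```

In a translation model the decoder recomputes these weights at every output step, so the same source word can matter more for one translated word than for another.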

Memory, including differentiable memory, can be used so that the network remembers the previous words and predicts what comes next. These deep neural networks are difficult to train because of the number of parameters: speech-to-text models have 50 million parameters and language-to-text models 40-100 million. This requires high-performance GPU computing.

Considerations for Multi GPU Deep Learning Model Training, Jonas Lööf, NVIDIA

Complex neural networks have more parameters. Google’s translation model, for example, uses 8,700 million parameters and 100 exaFLOPs. Therefore, they use multiple GPUs for training. The best practice for multiple GPUs is to optimize for a single GPU first.

- Hardware: NVLink for fast communication between GPUs, and the DGX-1 for scaling and performance.
- Software: frameworks (TensorFlow, etc.) and environments (NVIDIA).

For frameworks, Caffe has built-in multi-GPU support and is easy to configure, and PyTorch has a high-level interface for parallelisation across multiple GPUs. However, the most suitable framework for multiple GPUs is MXNet, which is as flexible as TensorFlow but still parallelises across multiple GPUs.
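The most common way to spread training over several GPUs is data parallelism: each device computes gradients on its own shard of the batch, and the gradients are averaged before the weight update. Below is a framework-free sketch that simulates the devices with plain Python lists, assuming a one-parameter linear model with squared-error loss and a batch size divisible by the number of devices. It shows why averaging equal-sized shard gradients reproduces the single-GPU gradient, which is one reason to get the single-GPU version right first.

```python
def gradient(w, batch):
    """Mean gradient of the squared error (w*x - y)^2 with respect to w."""
    return sum(2 * (w * x - y) * x for x, y in batch) / len(batch)

def shard(batch, n_devices):
    # Split the batch into equal-sized contiguous shards, one per simulated device.
    # Assumes len(batch) is divisible by n_devices.
    size = len(batch) // n_devices
    return [batch[i * size:(i + 1) * size] for i in range(n_devices)]

def data_parallel_gradient(w, batch, n_devices):
    # Each simulated "GPU" computes a local gradient; an all-reduce then averages them.
    local = [gradient(w, s) for s in shard(batch, n_devices)]
    return sum(local) / n_devices

batch = [(float(x), 3.0 * x) for x in range(8)]  # data generated by y = 3x
w = 0.5
print(gradient(w, batch), data_parallel_gradient(w, batch, n_devices=4))

# A few synchronous SGD steps using the averaged gradient.
for _ in range(50):
    w -= 0.01 * data_parallel_gradient(w, batch, n_devices=4)
print(round(w, 3))
```

Real frameworks perform the averaging with a collective all-reduce over the GPU interconnect, which is where fast links such as NVLink matter.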

Representations for Deep Learning, Marta Garnelo, DeepMind

Constrained models are used to get good representations of the data. The most popular types of models are reinforcement learning and object-oriented learning. Individually these are not very efficient and need high-level cognition, and neural networks are in essence black boxes, so it is hard to know what is happening inside them.

Word Embeddings in Search, Fabrizio Silvestri, Facebook

Search uses one-hot encoding, which translates each word into a vector that can be fed into a deep neural network. Information is extracted from search sessions and used to build the representation.
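Here is a minimal sketch of one-hot encoding with a small hypothetical vocabulary. It also shows why one-hot vectors pair naturally with word embeddings: multiplying a one-hot vector by an embedding matrix simply selects that word's row. The embedding values are made up; in practice they are learned, for example from search sessions as described in the talk.

```python
def one_hot(word, vocab):
    """Return a vector with a 1 at the word's index in the vocabulary and 0 elsewhere."""
    vec = [0] * len(vocab)
    vec[vocab.index(word)] = 1
    return vec

def embed(one_hot_vec, embedding_matrix):
    # Matrix-vector product: (one-hot row vector) x (vocab_size x dim matrix).
    dim = len(embedding_matrix[0])
    return [sum(h * row[j] for h, row in zip(one_hot_vec, embedding_matrix)) for j in range(dim)]

vocab = ["cheap", "flights", "london", "hotels"]
embedding_matrix = [  # hypothetical learned 2-d embeddings, one row per word
    [0.1, 0.9],
    [0.8, 0.2],
    [0.5, 0.5],
    [0.7, 0.3],
]

v = one_hot("london", vocab)
print(v)                           # [0, 0, 1, 0]
print(embed(v, embedding_matrix))  # same as embedding_matrix[2]
```

Because the product only selects a row, real systems skip the multiplication and index the embedding table directly. The one-hot view is still a useful way to see what the first layer does.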

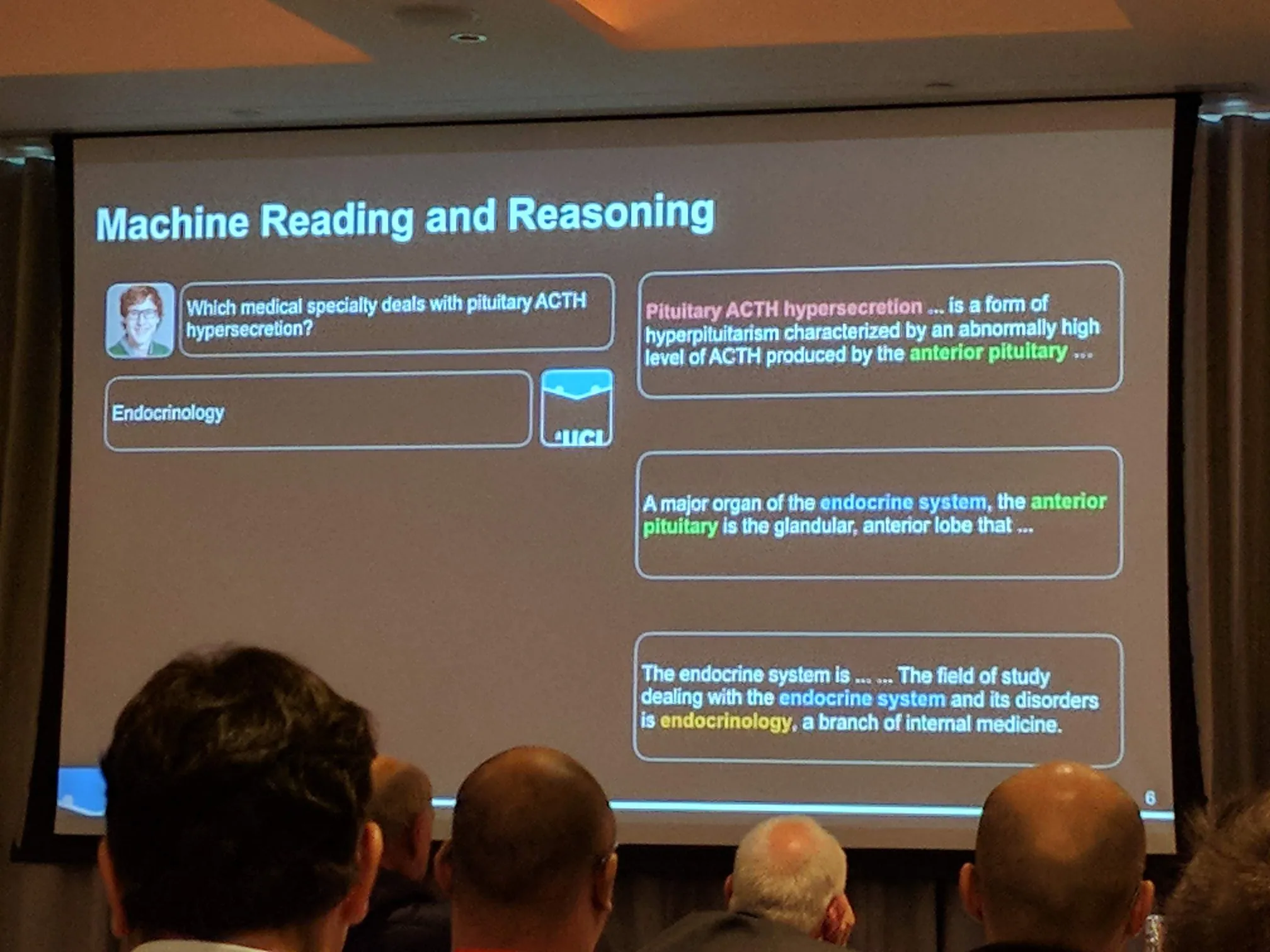

Towards Teaching Machines to Read and Reason, Sebastian Riedel, UCL / Bloomsbury.ai

The purpose of the neural network is to read a document and answer questions about the text. The same model can read both structured and semi-structured data. It can also extract information from a paragraph to do simple maths, and follow links within the paragraph to find the right answer.

The neural network is built from end-to-end differentiable readers and program interpreters. As it only needs downstream annotation, it is not expensive to run; the learnt models are interpretable, and you can inject prior knowledge into the network to make it more accurate.

Labelling Topics Using Neural Networks, Nikolaos Aletras, Amazon

Topic models are used to label the training data set, and a neural network can learn a set of topics. Document-topic allocations are multinomial distributions, giving the probability of each topic occurring. The labels can be text or images. For evaluation, the neural network either tries to replicate an average human-assigned rating, or is scored with Normalised Discounted Cumulative Gain (NDCG).
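Normalised Discounted Cumulative Gain rewards a ranking for putting highly relevant items near the top. Here is a minimal sketch using the common log2 position discount and linear gains; the relevance grades are hypothetical human ratings of candidate labels for a single topic, listed in the order the model ranked them.

```python
import math

def dcg(relevances):
    """Discounted cumulative gain: position i (0-based) is discounted by log2(i + 2)."""
    return sum(rel / math.log2(i + 2) for i, rel in enumerate(relevances))

def ndcg(relevances):
    # Normalise by the DCG of the ideal (descending) ordering of the same grades.
    ideal = dcg(sorted(relevances, reverse=True))
    return dcg(relevances) / ideal if ideal > 0 else 0.0

# Human-assigned relevance (0-3) of labels, in the order the model ranked them.
model_ranking = [3, 2, 3, 0, 1]
print(round(ndcg(model_ranking), 3))
print(ndcg([3, 3, 2, 1, 0]))  # a perfect ordering scores 1.0
```

Some formulations use an exponential gain of 2^rel - 1 instead of rel, which rewards highly relevant labels even more strongly. Either way, the score lies between 0 and 1.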

Opinion Mining Using Heterogeneous Online Data, Elena Kochkina, University of Warwick

In this talk, a neural network trained on social media data was used to study how rumours spread quickly online through Twitter. A stance classifier can classify a user’s stance on a rumour: the user can support, deny, question or comment on it, and scepticism towards a rumour implies it is fake. The conversation structure around the rumour is then used to verify it, and this verification is the output. The neural network can also do sentiment analysis, identifying whether the emotion of a document is positive, negative or neutral, and it can discover the sentiment towards the target of a sentence. Heterogeneous data from mobile phones, such as social media, messages, mobile data and calls, can also be used to support well-being.

Applications of DL: Anomaly Detection, Sentiment Classification, & Over-sampling, Noura Al Moubayed, Durham University

A deep neural network can be used for anomaly detection in cybersecurity, the cloud and social media. Tokenisation and topic modelling are used to sort anomalies into topics without supervision. Data is not abundant, but the neural network is easy and fast to train.

For sentiment classification, the neural network is trained on positive statements only and then on negative statements only.

If more samples are needed, a feedforward network generates new samples from the existing ones. Backpropagation is used for optimization.

Applications of this neural network include social robots that help autistic children deal with uncertainty.

One Perceptron to Rule Them All, Xavier Giro-i-Nieto, Universitat Politècnica de Catalunya

When we build neural networks, each layer is made up of perceptrons.

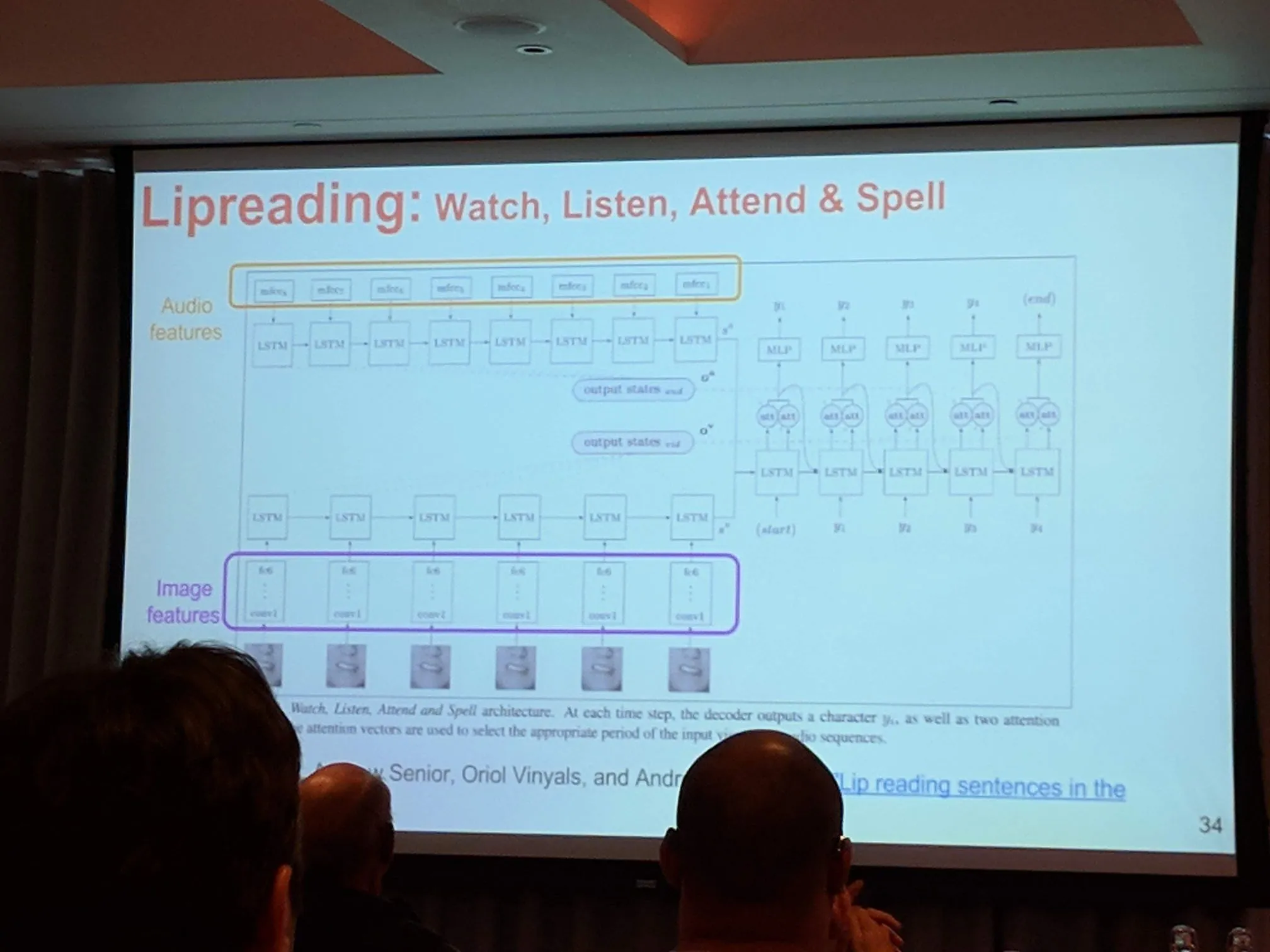
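A perceptron computes a weighted sum of its inputs plus a bias and passes the result through a step function. The sketch below trains a single perceptron on the logical AND function using the classic perceptron learning rule. It is a textbook illustration, not the speaker's code. With integer inputs and a learning rate of 1.0, all of the arithmetic is exact.

```python
def predict(weights, bias, inputs):
    # Weighted sum followed by a hard threshold (step activation).
    activation = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1 if activation > 0 else 0

def train_perceptron(samples, n_inputs, lr=1.0, epochs=20):
    """Classic perceptron learning rule: nudge weights by lr * error * input."""
    weights = [0.0] * n_inputs
    bias = 0.0
    for _ in range(epochs):
        for inputs, target in samples:
            error = target - predict(weights, bias, inputs)
            weights = [w + lr * error * x for w, x in zip(weights, inputs)]
            bias += lr * error
    return weights, bias

and_gate = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
weights, bias = train_perceptron(and_gate, n_inputs=2)
for inputs, target in and_gate:
    print(inputs, predict(weights, bias, inputs), "expected", target)
```

Deep networks stack many such units. They replace the hard step with a differentiable activation so that the whole stack can be trained with backpropagation.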

Perceptron-based neural networks are used in language translation, pix2pix (adding color, building images), adversarial training, image-to-word, image-to-caption, visual question answering, lip reading, cross-modal image↔text and Pic2Recipe.

Iterative Approach To Improving Sample Generation, Antonia Creswell, Imperial College London

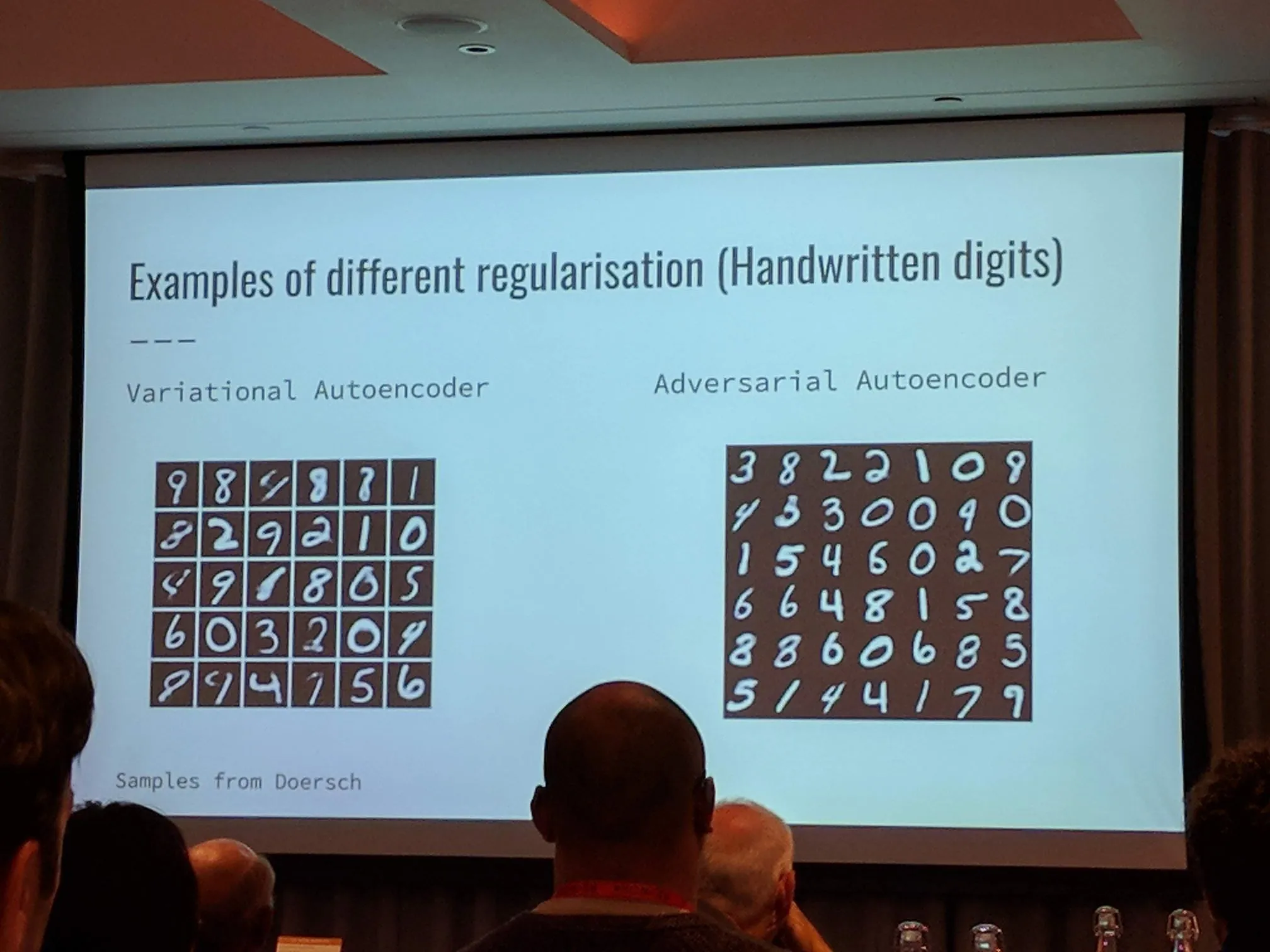

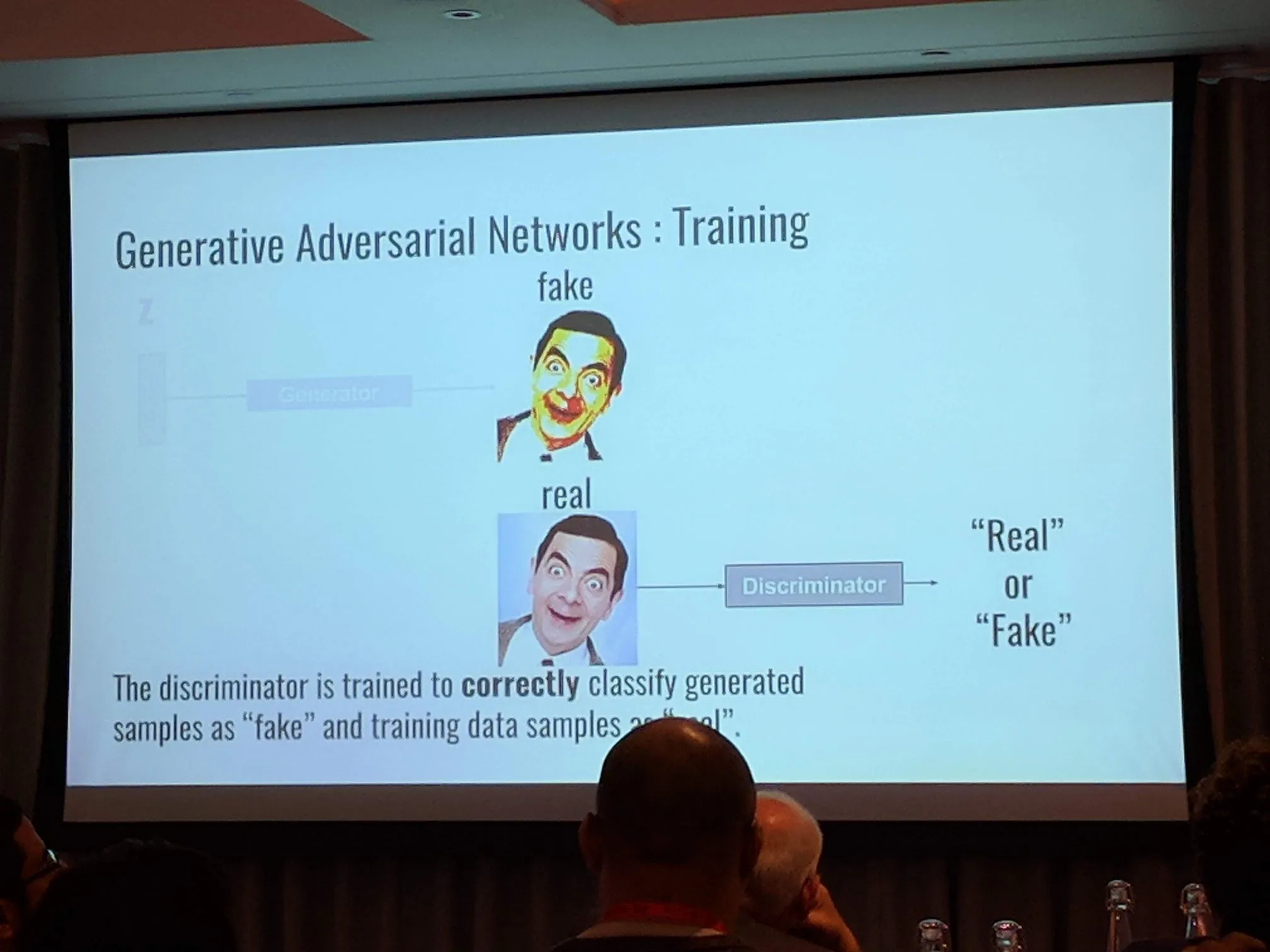

For small initial data sets, models can synthesise new data samples, augmenting the training data and imagining new concepts to better understand the data. There are two possible methods: generative autoencoders and generative adversarial networks. At least 100k training samples are needed, but they don’t need to be labelled.

The neural network layout is the following: Image -> Encoder -> Regularisation/Deep Net -> Decoder -> Image Variation

In the adversarial variant, a generative adversarial network (GAN), a discriminator decides whether the data has been generated or is real data.

Discovering & Synthesizing Novel Concepts With Minimal Supervision, Zeynep Akata, University of Amsterdam

This talk presented a neural network that produces side information by adding attributes, such as color, size or key features, to class data, and uses it for zero-shot learning.
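Zero-shot learning with side information can be sketched as attribute matching. A model predicts attribute scores for an image, and the image is assigned to whichever class has the closest attribute signature, including classes that had no training images. This minimal sketch assumes hand-written attribute vectors and a hypothetical attribute predictor's output. It illustrates the general idea rather than the speaker's model: a zebra can be recognised from its description alone.

```python
import math

# Column order of every attribute vector below.
ATTRIBUTES = ["striped", "black_and_white", "has_hooves", "large"]

# Class descriptions (side information). "zebra" has no training images.
CLASS_ATTRIBUTES = {
    "horse": [0.0, 0.0, 1.0, 1.0],
    "panda": [0.0, 1.0, 0.0, 1.0],
    "tiger": [1.0, 0.0, 0.0, 1.0],
    "zebra": [1.0, 1.0, 1.0, 1.0],  # unseen class, described only by attributes
}

def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

def zero_shot_classify(predicted_attributes):
    """Return the class whose attribute signature is most similar to the prediction."""
    return max(CLASS_ATTRIBUTES, key=lambda c: cosine(predicted_attributes, CLASS_ATTRIBUTES[c]))

# Hypothetical output of an attribute predictor for a photo of a zebra.
scores = [0.9, 0.8, 0.7, 0.9]
print(zero_shot_classify(scores))  # zebra
```

Adding a new class only requires writing down its attributes. The attribute predictor does not need to be retrained, which is what makes the approach attractive when labelled data is scarce.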

Cooperative AI for Critical Application, Fangde Liu, Imperial College London

AI has many applications in industries such as traffic, energy, finance and healthcare. However, AI in healthcare right now is like a baby who still needs to go to school: autonomous surgery was shut down by the FDA after just one day. TensorLab is currently working on AI vigilance.

Artificial Intelligence for Space-Based Technology, Luca Perletta, DigitalGlobe

Satellites gather 70 terabytes of data per day and put it on the cloud, and deep learning is then used to extract information at scale. This reveals global trends, such as where cars are parked, which can inform plans for where to put a new car park. Data such as how many cars are produced can be used by hedge funds to predict car manufacturers’ profits. Other uses include tracking oil production and the positions of ships or planes, continental-scale maps and flood-water classification.

A deep core framework is applied to the satellite imagery.

With all the data, could an AI predict wealth from space?

DigitalGlobe is launching satellites so that it can have images of anywhere in the world every 20 minutes.

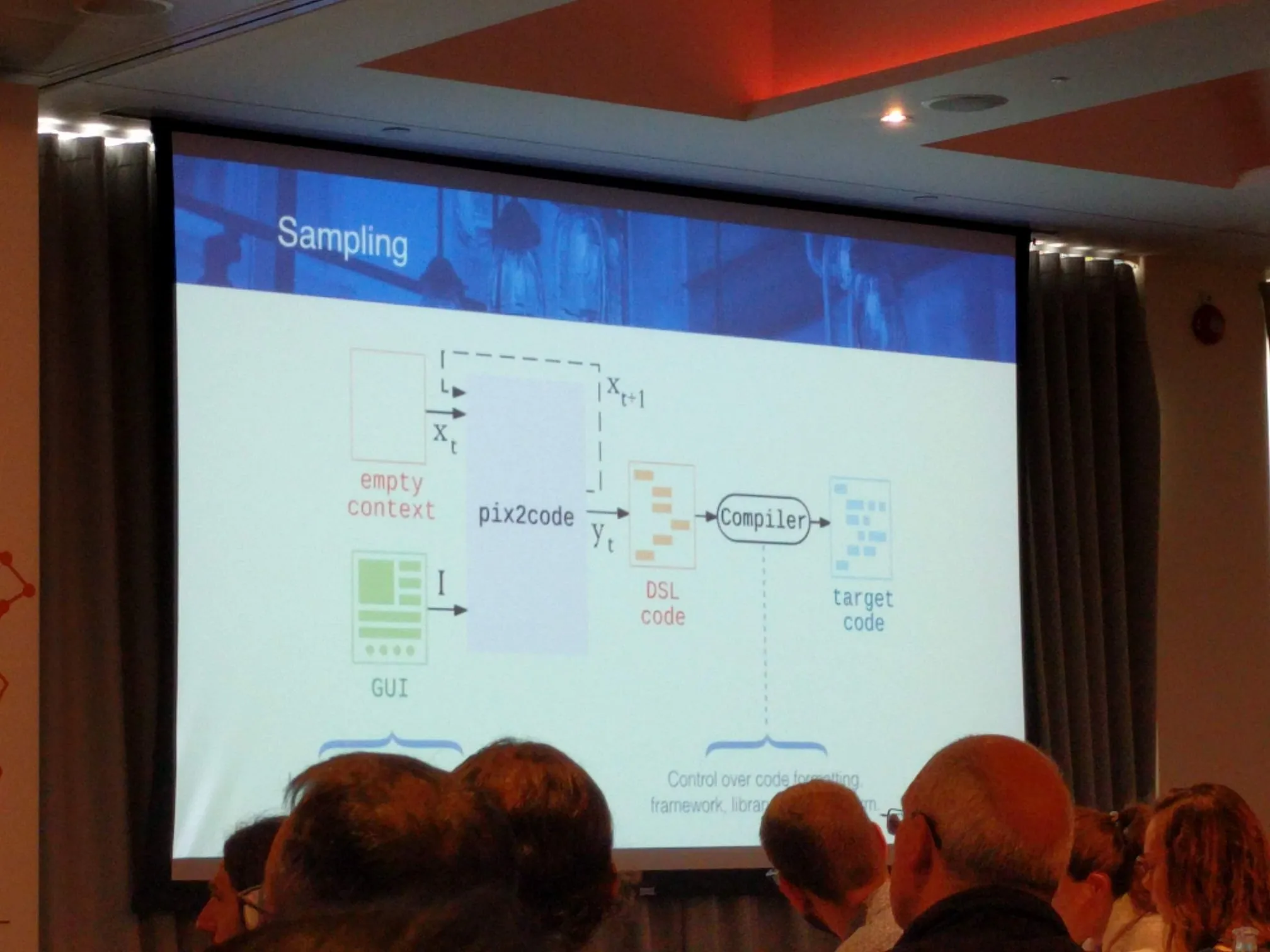

Towards Automatic Front-end Development With Deep Learning, Tony Beltramelli, uizard.io

UIzard’s deep neural network converts human designs into their own representation. They designed a domain-specific language that compiles to HTML/CSS, XML, etc. A pre-trained neural network recognizes the objects (cars, trees, shapes) in the design. A pix2code recurrent neural network is used because it is end-to-end differentiable, which helps accuracy.

The benefit of this model is that it needs no manually labelled data, and a limitless amount of sample data can be obtained by taking screenshots of web pages.
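To illustrate what a domain-specific language that compiles to HTML looks like, here is a toy compiler for an invented nested DSL. The token names and their HTML mapping are hypothetical and chosen for illustration; this is not UIzard's actual language. In such a pipeline, the network's job would be to emit a token sequence like this from a screenshot, and a deterministic compiler then turns it into markup for the target platform.

```python
# Hypothetical mapping from DSL tokens to (opening HTML, closing HTML).
TOKEN_HTML = {
    "header": ("<header>", "</header>"),
    "row": ('<div class="row">', "</div>"),
    "btn": ("<button>Button</button>", None),  # leaf element, no children
    "text": ("<p>Lorem ipsum</p>", None),
}

def tokenize(source):
    # Pad braces with spaces and treat commas as separators, so split() yields one token each.
    return source.replace("{", " { ").replace("}", " } ").replace(",", " ").split()

def compile_dsl(source):
    """Compile nested DSL such as 'header { btn } row { text }' into HTML."""
    out, stack = [], []
    tokens = tokenize(source)
    for i, tok in enumerate(tokens):
        if tok == "{":
            continue  # the opening tag was already emitted for the preceding token
        if tok == "}":
            out.append(TOKEN_HTML[stack.pop()][1])  # close the innermost open container
            continue
        open_html, close_html = TOKEN_HTML[tok]
        out.append(open_html)
        if i + 1 < len(tokens) and tokens[i + 1] == "{":
            stack.append(tok)  # container with children: close it at the matching "}"
        elif close_html is not None:
            out.append(close_html)  # container with no children
    if stack:
        raise ValueError("unbalanced braces")
    return "".join(out)

print(compile_dsl("header { btn, btn } row { text }"))
```

Retargeting the output to another platform, such as Android XML, would only mean swapping the token mapping. The network that predicts the tokens stays the same.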

Bayesian DL for Accurate Characterisation of Uncertainties in Time Series Analysis, Christopher Bonnett, alpha-i

Deep neural networks have limitations such as static information sets, models that don’t know what they don’t know, and the need for a lot of human involvement to optimize them.

In Bayesian deep learning, uncertainty is carried through to the output, i.e. the result comes with an uncertainty estimate. Outside the range of its training data, a Bayesian deep learning network gives uncertain results and effectively says “I don’t know. Get more data here.” This gives a very risk-averse neural network, although it comes with more computational overhead.
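The "I don't know" behaviour can already be seen in the simplest Bayesian model, Bayesian linear regression, where the predictive variance has a closed form. This is a minimal sketch assuming a straight-line model, a Gaussian prior on the weights (precision `ALPHA`) and a known noise precision (`BETA`). Both values are invented for illustration. Bayesian deep learning applies the same principle with far more parameters and approximate inference. The training inputs lie in [0, 1], and the predictive standard deviation grows the further you move away from them.

```python
ALPHA = 1.0   # prior precision of each weight (assumed)
BETA = 25.0   # noise precision, i.e. noise standard deviation 0.2 (assumed)

def fit_bayesian_line(xs, ys, alpha=ALPHA, beta=BETA):
    """Posterior over weights [w0, w1] for y = w0 + w1 * x with Gaussian prior and noise.

    Returns the posterior mean m and covariance S (2x2, as nested lists).
    """
    # Posterior precision A = alpha * I + beta * Phi^T Phi, where Phi has rows [1, x].
    n, sx, sxx = len(xs), sum(xs), sum(x * x for x in xs)
    a, b, d = alpha + beta * n, beta * sx, alpha + beta * sxx  # A = [[a, b], [b, d]]
    det = a * d - b * b
    S = [[d / det, -b / det], [-b / det, a / det]]  # closed-form inverse of the 2x2 matrix A
    # Posterior mean m = beta * S * Phi^T y.
    t0 = beta * sum(ys)
    t1 = beta * sum(x * y for x, y in zip(xs, ys))
    m = [S[0][0] * t0 + S[0][1] * t1, S[1][0] * t0 + S[1][1] * t1]
    return m, S

def predictive(x, m, S, beta=BETA):
    """Predictive mean and standard deviation at input x."""
    phi = [1.0, x]
    mean = m[0] + m[1] * x
    # Variance = noise variance + phi^T S phi (the uncertainty about the weights).
    var = 1.0 / beta + sum(phi[i] * S[i][j] * phi[j] for i in range(2) for j in range(2))
    return mean, var ** 0.5

xs = [0.0, 0.25, 0.5, 0.75, 1.0]
ys = [1.0, 1.5, 2.0, 2.5, 3.0]  # noise-free points on y = 1 + 2x
m, S = fit_bayesian_line(xs, ys)
for x in (0.5, 2.0, 10.0):
    mean, std = predictive(x, m, S)
    print(f"x={x:>4}: {mean:.2f} +/- {std:.2f}")
```

Near the data, the spread is close to the assumed noise level. Far outside [0, 1], the weight uncertainty term dominates and the error bars widen, which is the model's way of asking for more data there.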

Using Deep Learning to Understand the Meaning of Content, Antoine Amann, Echobox

At Echobox they use AI to auto-generate social media content (the image and relevant text) and post it at the right time. It is used by the Guardian and New Scientist to generate tweets and Facebook posts promoting their articles. Their deep learning networks also predict how viral articles will be.

The neural network understands the meaning of an article’s content and the audiences that read it. Therefore, each client has its own model based on the articles it publishes.

They used data on who was reading which articles to predict elections. They correctly predicted the French election through both rounds, minute by minute, based on which articles people in France were reading. They are currently doing the same with the German election.

AI that can Compose Original Music, Ed Newton-Rex, Jukedeck

Jukedeck’s AI uses deep neural networks rather than rule-based systems, Markov chains or evolutionary algorithms, which are viable alternatives. It trains on MIDI notes and chords to produce new music.

You can try it here and create your own piece of music with deep learning (navigate to “Make” through the link).

End-to-End Deep Learning for Detection, Prevention, & Classification of Cyber Attacks, Eli David, Deep Instinct

Currently, most new malware is simply old malware that has been slightly altered to become undetectable. As a result, cybersecurity is a reactive rather than a proactive industry.

Raw data is converted into linear features and fed into the supervised deep neural network as raw binary values. Conventional methods are not reliable because of the large data sets and complex data involved.
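Feeding raw binary values to a network can be sketched as a fixed-length preprocessing step. The file's bytes are read, truncated or zero-padded to a set length, and each byte is scaled to [0, 1]. The input length of 16 and the scaling are assumptions for illustration. The talk did not describe Deep Instinct's actual feature pipeline at this level of detail, and real inputs would be far longer.

```python
def bytes_to_input(data, length=16):
    """Convert raw file bytes into a fixed-length vector of values in [0, 1]."""
    clipped = data[:length]                          # truncate long files
    padded = clipped + bytes(length - len(clipped))  # zero-pad short files
    return [b / 255.0 for b in padded]               # scale each byte (0-255) to [0, 1]

sample = b"MZ\x90\x00\x03"  # first bytes of a hypothetical Windows executable
vec = bytes_to_input(sample)
print(len(vec), vec[:3])
```

Working on raw bytes avoids hand-engineered features. Slightly mutated malware then changes only a few input values, instead of defeating a signature outright.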

The neural network works on both mobile devices and endpoints (computers). A trained neural network is installed on the device and scans new files. It also works without a connection and only has to be updated every three to four months, because the deep neural network is trained on mutated malware. This means it knows how to handle malware it has never seen before.

You could argue that this neural network can be used to create new malware.

The Quest for Understanding Real World With Synthetic Data, Ankur Handa, OpenAI

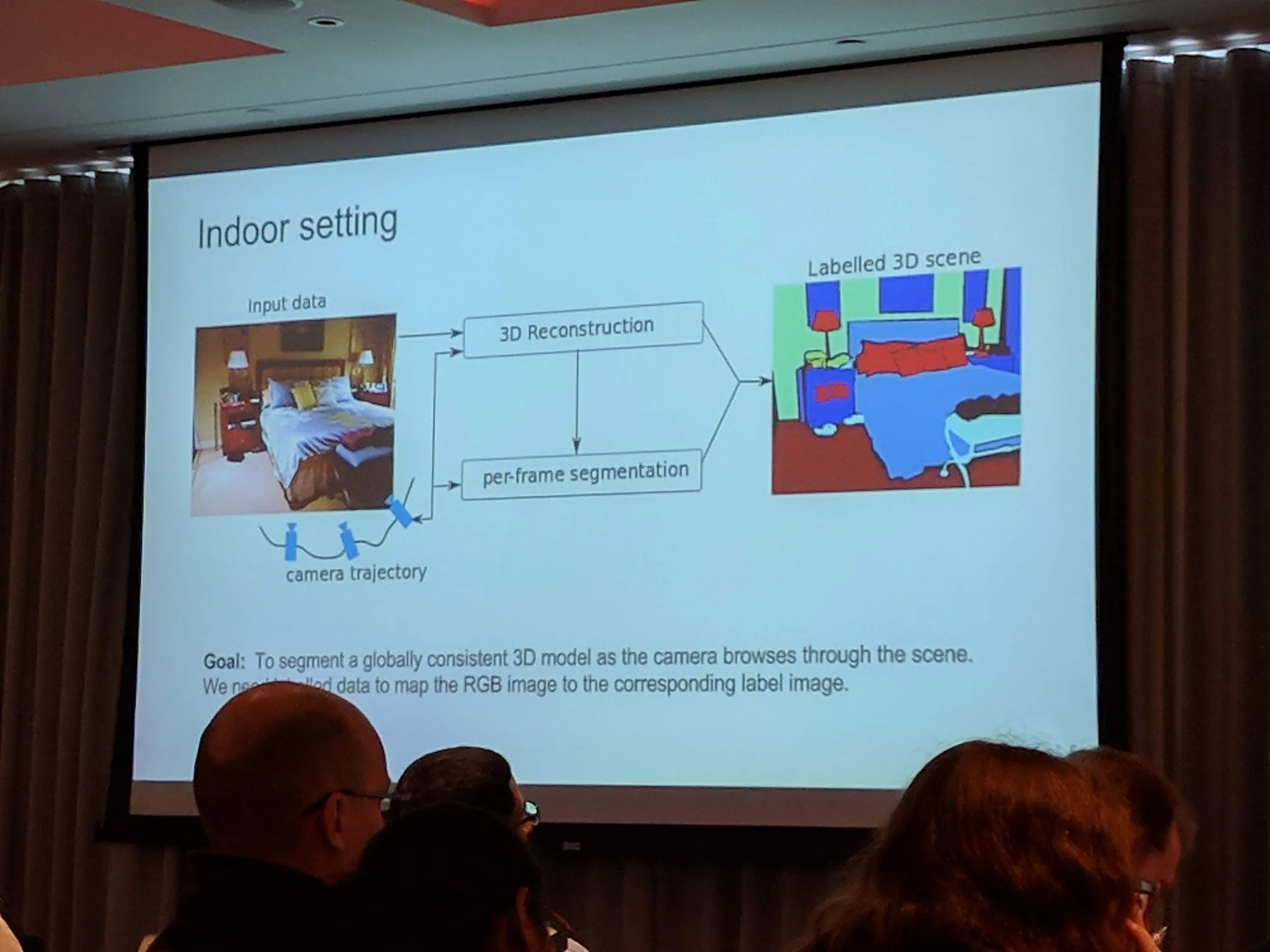

A 3D model is built using a connected camera. However, it cannot recognize the individual objects in the real world. What the neural network really needs is a labelled 3D scene. Geometric data, such as depth, height and the angle of gravity, and graphical data, such as color (RGB), are collected.

This makes it possible to generate interior designs automatically under constraints, e.g. no table between the sofa and the TV.

Memory & Rapid Adaption in Generative Models, Jörg Bornschein, DeepMind

Supervised tasks have high-dimensional inputs but low-dimensional outputs.

There are three approaches to producing better outputs:

- Autoregressive: generate one pixel at a time.
- Adversarial: noise -> generator neural network -> discriminator, which decides whether an image is real or fake. The generator wants to fool the discriminator, while the discriminator is trained to identify real images.
- Direct latent variable: decode random noise into observed data.
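For reference, the three approaches correspond to three standard ways of writing down a generative model. This is textbook notation, not taken from the talk: $x_i$ is the $i$-th pixel of an image $\mathbf{x}$, $G$ is the generator, $D$ the discriminator and $\mathbf{z}$ the latent noise.

```latex
\begin{aligned}
\text{Autoregressive:}\quad & p(\mathbf{x}) = \prod_{i=1}^{n} p\left(x_i \mid x_1, \dots, x_{i-1}\right) \\
\text{Adversarial:}\quad & \min_G \max_D \; \mathbb{E}_{\mathbf{x} \sim p_{\text{data}}}\left[\log D(\mathbf{x})\right] + \mathbb{E}_{\mathbf{z} \sim p(\mathbf{z})}\left[\log\left(1 - D(G(\mathbf{z}))\right)\right] \\
\text{Latent variable:}\quad & p(\mathbf{x}) = \int p(\mathbf{x} \mid \mathbf{z})\, p(\mathbf{z})\, d\mathbf{z}
\end{aligned}
```

Generating one pixel at a time amounts to sampling each factor of the autoregressive product in turn. In the adversarial objective, the discriminator maximises and the generator minimises the same expression.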

Additionally, there is memory augmentation, where the memory contains examples from the training set.

What Would it Take to Train an Agent to Play with a Shape-Sorter?, Feryal Behbahani, Imperial College London

Deep reinforcement learning is used for training. The observation is passed into the neural network to produce an output. The test environment is a simulator of an arm putting objects in the correct places, and the agent is the deep neural network collecting observations from the simulator.

Training uses Asynchronous Advantage Actor-Critic (A3C). The actor is the neural network that moves the arm in the simulation, and the critic sees the results and judges their value.
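In actor-critic methods, the critic's value estimate is turned into an advantage: how much better the observed outcome was than the critic expected. This is a minimal sketch of the n-step return and advantage computation used in A3C-style training. The rewards and critic values for the short rollout are hypothetical, and the discount factor `gamma` is an assumed hyperparameter.

```python
def n_step_advantages(rewards, values, bootstrap_value, gamma=0.99):
    """Compute discounted returns and advantages for one rollout.

    rewards[t] is the reward after step t, values[t] is the critic's V(s_t), and
    bootstrap_value is V(s_T) for the state after the last step (0 if terminal).
    """
    returns = []
    running = bootstrap_value
    for r in reversed(rewards):
        running = r + gamma * running  # R_t = r_t + gamma * R_{t+1}
        returns.append(running)
    returns.reverse()
    # Advantage A_t = R_t - V(s_t): positive means the action went better than expected.
    advantages = [ret - v for ret, v in zip(returns, values)]
    return returns, advantages

# Hypothetical rollout: the arm drops the shape in the right hole on the last step.
rewards = [0.0, 0.0, 1.0]
values = [0.5, 0.6, 0.8]  # critic's estimates V(s_t)
returns, advantages = n_step_advantages(rewards, values, bootstrap_value=0.0, gamma=0.9)
print(returns)
print(advantages)
```

The actor is then updated to make actions with positive advantage more likely, and the critic is trained to shrink the advantages towards zero. In A3C, several workers do this in parallel on their own copies of the environment.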

Possible ways to make this problem easier to learn include learning with auxiliary information (e.g. depth), learning vision separately from control, or learning from demonstrations, which is supervised learning.

Artificial Intelligence Driving the Future of Connected Cars, Maggie Mhanna, Renault Digital

AI can be used to predict battery performance in electric cars, using driving data such as mileage, charge, voltage and warranty. It could also predict future battery failure.

Balance, Bias & Size: Unlocking the Potential of Retrospective Clinical Data for Healthcare Applications, Timor Kadir, Optellum

AI can be used to look at images of lungs for lung cancer management. However, any bias in the retrospective data sets affects the accuracy of the neural network.

The machine learning system is trained on medical images: it takes an image, passes it through the network and applies regression, with a risk score as the output.

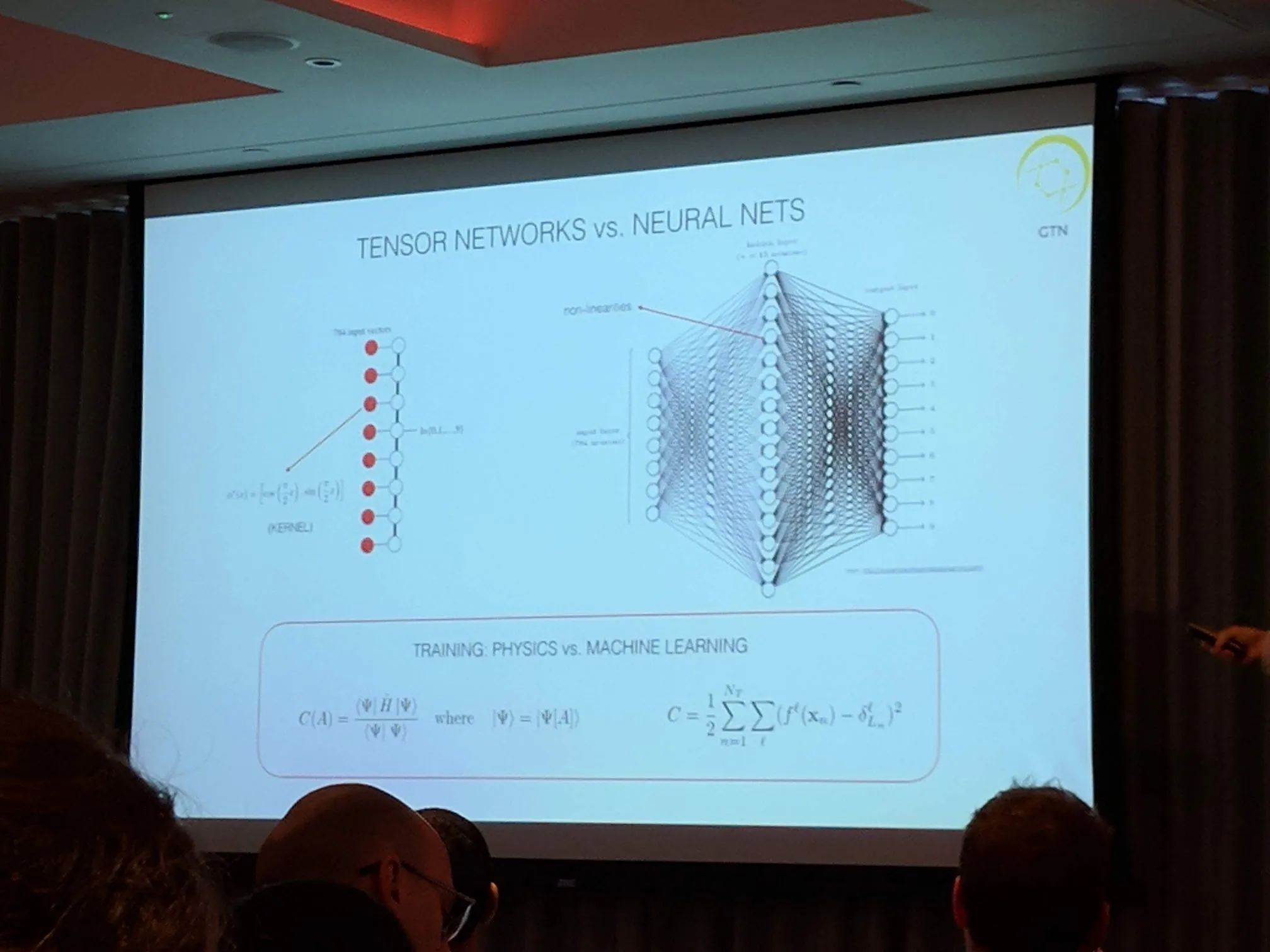

Drug Discovery Disrupted: Quantum Physics Meets Machine Learning, Noor Shaker, GTN

Schrödinger’s equation is used to apply machine learning to physical systems. In quantum mechanics, states correspond to vectors, which can then be input into the machine learning system.